Test Aspect

Testing is an essential part of a language designer's work.

— MPS documentation

- Testing in MPS: What and Why ⧉ (languageengineering.io's blog)

- The Language Testing Triangle ⧉ (Markus Voelter's blog)

General

For more information about testing in general, read MPS Internals | Testing.

Can you skip tests with an annotation, as you usually can with JUnit?

This isn't supported out of the box. You can either comment out the test case or add the @Ignore attribute in the reflective editor. The only construct with official support for this is the assert statement of KernelF (AssertTestItem ⧉).

What's the TestInfo node used for?

How to create a TestInfo node for your tests ⧉ (Specific Languages' blog)

How do node tests work?

How do node tests work? ⧉ (Specific Languages' blog)

Can you invoke an action by ID?

Yes, use the statement invoke action by id.

How do you enter multiple words when using the type statement in an editor test?

Enter them as one long word without spaces in between, for example, publictransientclass.

How do you know if some MPS code is inside a test?

I am writing some code in MPS that should only run when no tests are running. How can I detect whether I'm running a test?

- Consider mocking the relevant part out instead, or make sure that you are testing at a low enough level.

- Here are two options if you need to do this:

contributed by: @abstraktor
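As a minimal sketch of the first suggestion (mocking instead of detecting tests), the plain Java code below passes the side effect in as a dependency rather than asking whether a test is running; a test then hands in a recording fake. All names (`Notifier`, `ConsoleNotifier`, `RecordingNotifier`, `NameValidator`) are hypothetical, and the same structure could be expressed with Base Language classes in MPS.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical abstraction over a side effect that should not happen in tests.
interface Notifier {
    void notifyUser(String message);
}

// Production implementation; real code might open a dialog or post a notification.
class ConsoleNotifier implements Notifier {
    @Override
    public void notifyUser(String message) {
        System.out.println("NOTIFY: " + message);
    }
}

// Test double: records messages instead of showing them.
class RecordingNotifier implements Notifier {
    final List<String> messages = new ArrayList<>();

    @Override
    public void notifyUser(String message) {
        messages.add(message);
    }
}

// Code under test receives its collaborator instead of checking "am I in a test?".
class NameValidator {
    private final Notifier notifier;

    NameValidator(Notifier notifier) {
        this.notifier = notifier;
    }

    // Counts empty names and reports each one through the injected notifier.
    int check(List<String> names) {
        int problems = 0;
        for (String name : names) {
            if (name.isEmpty()) {
                problems++;
                notifier.notifyUser("Found an element without a name");
            }
        }
        return problems;
    }
}
```

Production code would construct `new NameValidator(new ConsoleNotifier())`, while a test passes a `RecordingNotifier` and asserts on its `messages`, so the code itself needs no test-detection logic.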

How do I access the current project inside a node test case?

Use the project ⧉ expression.

Why does the node ID change during a node test?

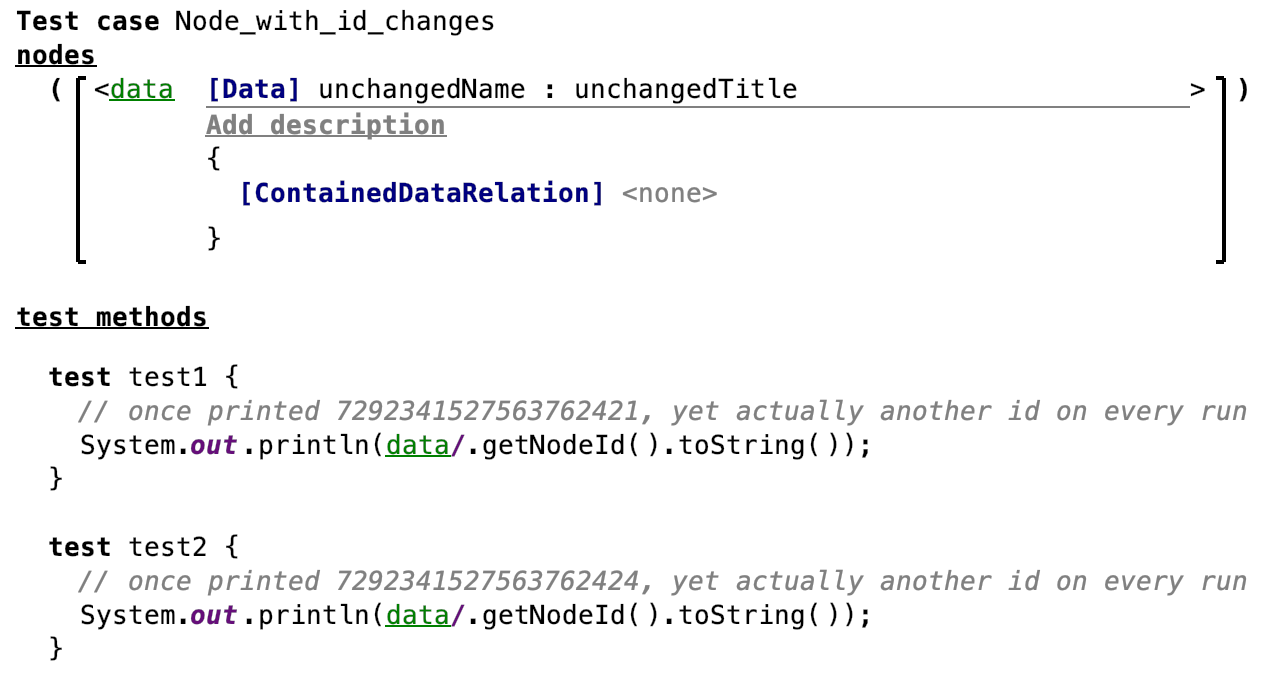

Suppose I have a node test case with a check node called data and two tests, test1 and test2, that both print the node ID of data. When I run the test case, test1 and test2 print different node IDs.

Why is that?

Terms used:

- check node: the fixture nodes that you enter into the test case under nodes

- test case: the root node, the chunk that contains the tests

- tests: the test methods, here test1 and test2

- Each test case runs on a copy of its model.

MPS tries to keep tests reproducible and isolated even when they run in-process. To do so, it copies the whole model into a temporary model. Modifications made by one test case are invisible to the next test case, which works on a new temporary model, so test cases cannot interact.

Running tests in a separate model ensures that they will never modify the original model (as long as you don't explicitly start acting on other models).

- Check nodes per test

A test case may contain multiple tests, though, and MPS also isolates the individual tests of the same test case: the check nodes are copied once for each test, and each test acts on its own copy.

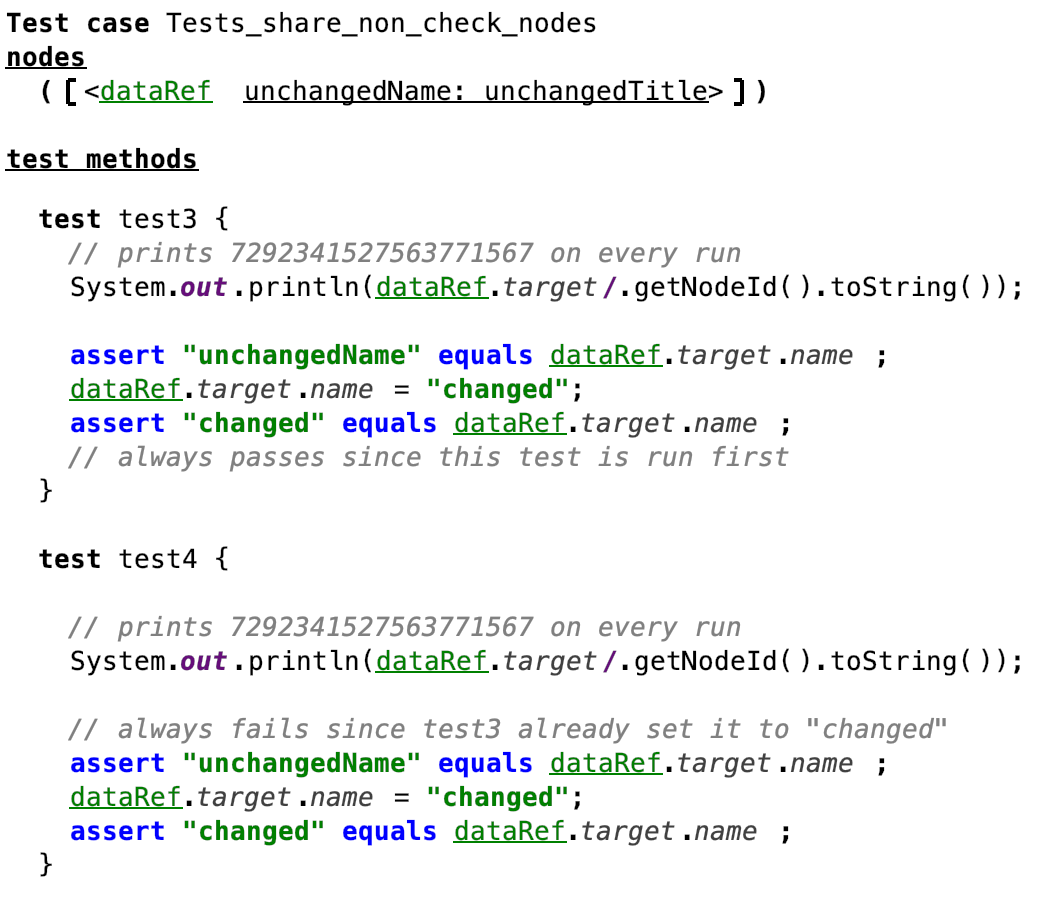

- All tests of a test case share their referenced nodes

To save memory, all these copies of the check nodes lie in the same temporary model for each test case. Nodes outside the test case that they reference are copied only once and shared by all tests of that test case. As a result, the IDs of check nodes change, while all other nodes keep the IDs they have in the original model.

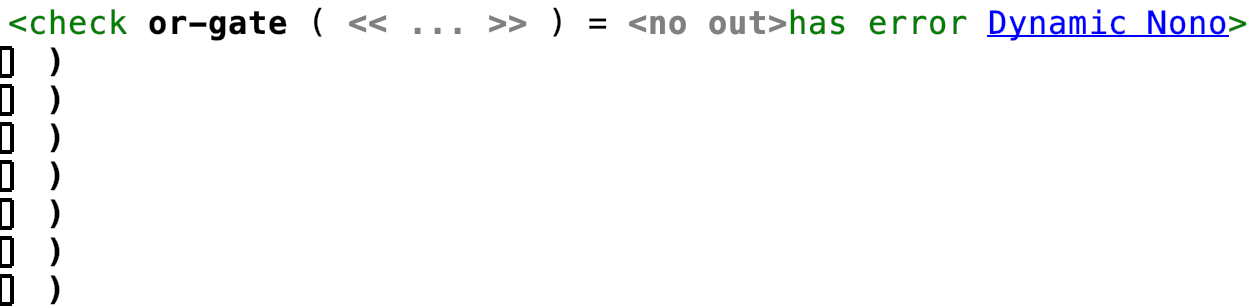

Consequently, multiple tests of the same test case are only partially isolated. In the following example, both tests assert and do the same, yet test3 passes while test4 fails. The data element is now located in a separate chunk outside the test case, and the check node references it. As a result, test4 fails because test3 has already modified the referenced node.
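The mechanism can be illustrated with a simplified, hypothetical Java model. It is not MPS code, and `Node`, `TestOutcome`, and `NodeTestSimulation` are invented names. The node outside the test case is copied once into the temporary model and keeps its ID, every test gets a fresh copy of the check node with a new ID, and all copies point to the same referenced node. Running it reproduces the test3/test4 behavior: the first test passes, the second fails because it sees the first test's modification, and the node in the original model stays untouched.

```java
import java.util.ArrayList;
import java.util.List;

// Simplified, hypothetical model of node-test isolation; this is not MPS code.
class Node {
    private static long nextId = 1;

    final long id;
    String value;
    final Node reference; // target of the node's reference, or null

    private Node(long id, String value, Node reference) {
        this.id = id;
        this.value = value;
        this.reference = reference;
    }

    // Creates a node with a fresh ID.
    static Node create(String value, Node reference) {
        return new Node(nextId++, value, reference);
    }

    // Copy into the temporary model: a new object that keeps the original ID.
    Node copyWithSameId() {
        return new Node(id, value, reference);
    }

    // Per-test copy of a check node: fresh ID, same reference target.
    Node copyWithFreshId() {
        return create(value, reference);
    }
}

class TestOutcome {
    final String testName;
    final boolean passed;
    final long checkNodeId;
    final long referencedNodeId;

    TestOutcome(String testName, boolean passed, long checkNodeId, long referencedNodeId) {
        this.testName = testName;
        this.passed = passed;
        this.checkNodeId = checkNodeId;
        this.referencedNodeId = referencedNodeId;
    }
}

class NodeTestSimulation {
    // Runs two identical tests that assert on, then modify, the referenced node.
    static List<TestOutcome> runTestCase(Node originalData) {
        String expected = originalData.value;
        // The referenced node lies outside the test case: copied once, shared by all tests.
        Node sharedData = originalData.copyWithSameId();
        Node checkNode = Node.create("dataRef", sharedData);

        List<TestOutcome> outcomes = new ArrayList<>();
        for (String testName : new String[] {"test3", "test4"}) {
            Node myCheckNode = checkNode.copyWithFreshId(); // own copy, new ID
            boolean passed = expected.equals(myCheckNode.reference.value);
            myCheckNode.reference.value = "modified by " + testName; // visible to later tests
            outcomes.add(new TestOutcome(testName, passed, myCheckNode.id, myCheckNode.reference.id));
        }
        return outcomes;
    }

    public static void main(String[] args) {
        Node original = Node.create("42", null);
        for (TestOutcome o : runTestCase(original)) {
            System.out.println(o.testName + (o.passed ? " passed" : " failed")
                    + ", check node ID " + o.checkNodeId
                    + ", referenced node ID " + o.referencedNodeId);
        }
        System.out.println("original value: " + original.value);
    }
}
```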

- Kinds of tests

I checked this behavior in depth for node tests.

- I think it is similar for editor tests.

- I am sure that this does not apply to Base Language tests, since they don't prepare a temporary model for you.

Practical effects of this

These are some rules of thumb that result from that:

- The tests of a test case may interact, so move all nodes that your tests modify into the test case as check nodes.

- When modifying many nodes in the model, consider writing a migration and migration test instead.

- Remember that the console and each test show different IDs for the conceptually same node, and these IDs change from run to run.

- It is easy to be confused by that and draw false conclusions, especially when stepping through tests.

- Whenever your code queries the nodes of a model, expect to see duplicates for each test (as in the dataRef example). To test such code, assert that specific nodes are included or excluded instead of asserting that the expected and actual lists are equal.

- Another source for duplicates is if the test model imports itself.

- If you need full control over the temporary model, consider writing a Base Language test and creating the repository and model by hand. jetbrains.mps.smodel.TestModelFactory supports this; the ModelListenerTest ⧉, for example, uses it. Unfortunately, TestModelFactory is not available as stubs, so you'll need to copy it into your project.
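The following sketch, in plain Java with made-up node names, contrasts the two assertion styles recommended above: when a query returns one `dataRef` per test, a strict equality check fails, while checking for inclusion and exclusion still passes. `QueryAssertions` is a hypothetical helper, not part of MPS.

```java
import java.util.Collections;
import java.util.List;

// Hypothetical helpers contrasting two ways of asserting on query results.
class QueryAssertions {
    // Brittle: fails as soon as the query also returns per-test duplicates.
    static boolean equalsExactly(List<String> actual, List<String> expected) {
        return actual.equals(expected);
    }

    // Robust: only requires some names to be present and others to be absent.
    static boolean includesAndExcludes(List<String> actual,
                                       List<String> mustInclude,
                                       List<String> mustExclude) {
        return actual.containsAll(mustInclude) && Collections.disjoint(actual, mustExclude);
    }
}
```

For example, if a query in a test case with two tests returns `[data, dataRef, dataRef]`, `equalsExactly` against `[data, dataRef]` returns false, while `includesAndExcludes` with the same expected names returns true.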

contributed by: @abstraktor

How to find out if a node is inside a test model?

SModelStereotype#isTestModel is the right method for this. The same approach works for other special models: SModelStereotype#isStubModel and SModelStereotype#isGeneratorModel. For aspect models, use model.isAspectModel(x), where x is a reference to the aspect, such as editor.

How to Test

This section deals with practical questions about testing. Testing should be a big part of the CI pipeline to ensure that changes don't introduce bugs and that new features work as intended. Not everything needs to be tested, and manual testing cannot be avoided entirely. Still, every test gives you more confidence in your changes, so don't neglect this part of language development. Base Language and KernelF support test cases and assert statements.

How do you implement custom tests?

Implementing custom tests ⧉ (Specific Languages' blog)

How to set up a generator smoke test?

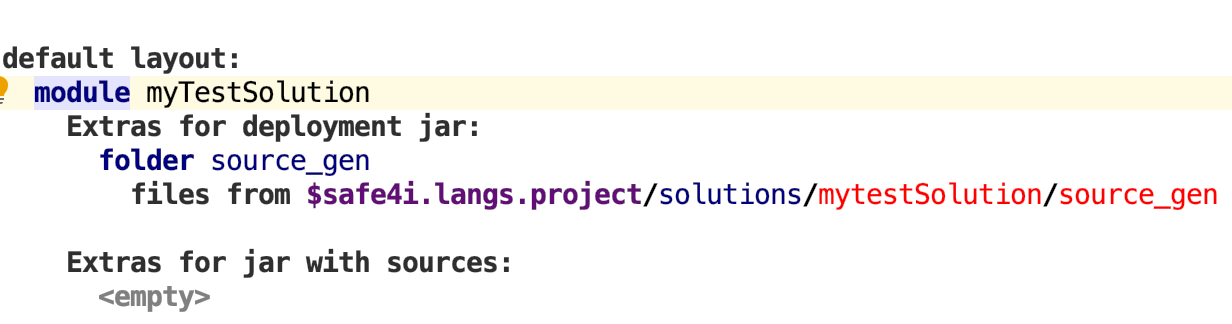

I want to write a generator smoke test. For this, I have some solutions with models (regular ones, not @test models) that are built from the command line; the invoked generators produce some .c files.

In addition, I have a @test model in the same solution with some unit tests that check whether the output directories of these models contain any generated files. I don't invoke the generation of the models programmatically but rely on the Ant task that generates the solution.

Unfortunately, this setup always leads to failing tests: the unit tests are executed before the models are built during the CI run, so the tests fail.

asked by: @arimer

If the tests work fine from within the IDE, the problem is that in CI they run from the JARs, not from the sources. The generator output location then points into the JAR file that the tests are executed from, not to the real source location.

You could change the packaging to include the source_gen folder for your specific test solution.

Make this change to the default layout of your build model:

In this case, you need to detect if you are running from sources or a JAR in CI and change the location where you look for the generated files. You can calculate a relative path for the test solution containing the packed sources.
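A minimal sketch of that detection in plain Java, assuming that a test running from sources is loaded from the test solution's `classes_gen` folder and that in CI the checkout root is known, for example from a system property you define yourself. `GeneratedFilesLocator`, its parameters, and the folder names in the usage note are hypothetical; `getProtectionDomain().getCodeSource().getLocation()` is a common JDK idiom for finding where a class was loaded from.

```java
import java.net.URISyntaxException;
import java.nio.file.Path;
import java.nio.file.Paths;

// Hypothetical helper for a generator smoke test; the folder layout is an assumption.
class GeneratedFilesLocator {
    // Where this class was loaded from: a JAR file in CI, a class folder in the IDE.
    // getCodeSource() is usually non-null for application classes.
    static Path currentCodeSource() throws URISyntaxException {
        return Paths.get(GeneratedFilesLocator.class
                .getProtectionDomain().getCodeSource().getLocation().toURI());
    }

    static boolean isRunningFromJar(Path codeSource) {
        Path fileName = codeSource.getFileName();
        return fileName != null && fileName.toString().endsWith(".jar");
    }

    // Resolves an output folder given relative to the test solution's folder.
    // checkoutRoot: assumed to be known in CI, e.g. from a system property you define.
    // testSolutionDir: the test solution's folder relative to the checkout root.
    static Path resolveOutputDir(Path codeSource, Path checkoutRoot,
                                 Path testSolutionDir, Path relativeOutputDir) {
        Path solutionFolder = isRunningFromJar(codeSource)
                ? checkoutRoot.resolve(testSolutionDir) // CI: use the real source location
                : codeSource.getParent();               // IDE: assumes <solution>/classes_gen
        return solutionFolder.resolve(relativeOutputDir).normalize();
    }
}
```

A test could then call `resolveOutputDir(currentCodeSource(), checkoutRoot, Paths.get("solutions/smoke.tests"), Paths.get("../my.input/source_gen"))` and check that the resulting folder contains generated files, with the same relative path working in both environments.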

An easier solution is to place the tests in a separate solution and invoke the make process programmatically for the solution that contains your input, so that you can assert on the output. An example of how to invoke the make process can be found in the mbeddr-c part ⧉.

answered by: @coolya

Is it recommended to write editor tests?

Editor tests are often difficult to maintain and break easily (for example, when a cell ID changes). Where possible, avoid them and write other kinds of tests instead. For example, you can call behavior methods directly to simulate creating or modifying nodes, and if you want to test whether a node can be created, a scope test that checks references may be sufficient. If you do write editor tests, keep them short so that they are easier to fix when they fail. Another problem with editor tests is that they don't show differences graphically (MPS-38305 ⧉).

How to write editor tests for context assistants?

I need to unit test context assistants ⧉, ideally with an EditorTestCase, but it is not supported out of the box.

This snippet lets you test the context assistant automatically in the code section of the EditorTestCase:

Note:

- "Item Name" must be replaced with the name of the item as shown in the editor

- "_this" is a concept from the internalBaseLanguage DSL.

contributed by: @cmoine

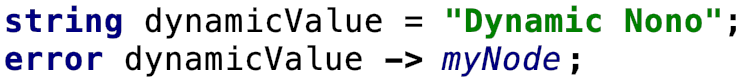

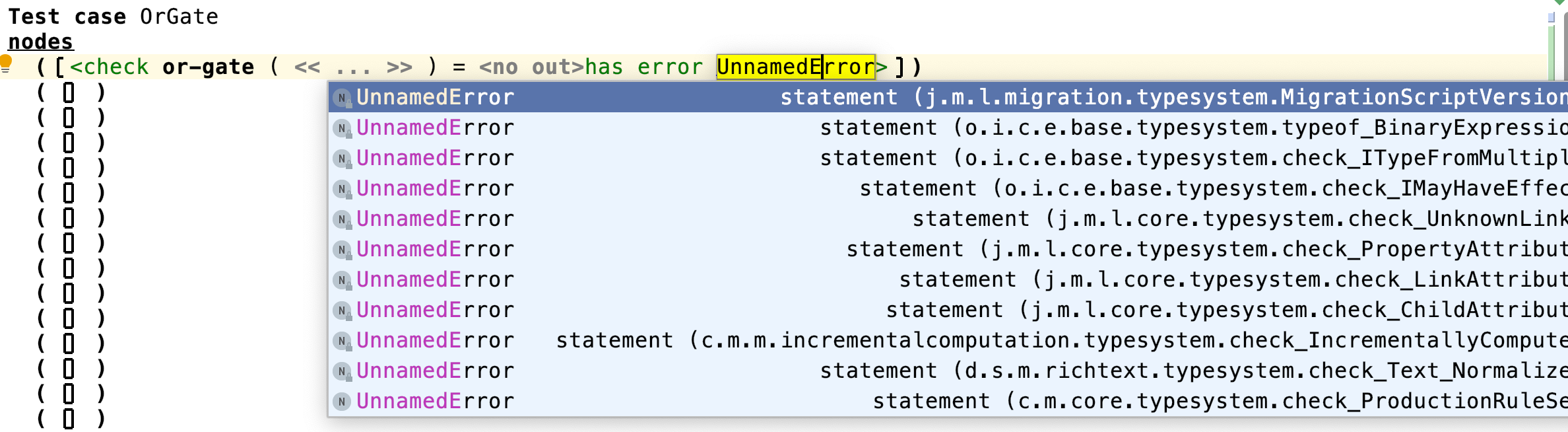

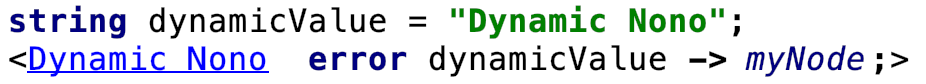

How do you name check errors with dynamic messages?

When I make the error text dynamic:

Then my error is named UnnamedError, and I have a hard time selecting the right one. How do I name errors with dynamic messages?

- Go to your error statement in your checking rule.

- Import the language jetbrains.mps.lang.test.

- Run the intention Add Message Annotation.

- Type a nice name.

Note that this does not work for errors that result from constraints.

contributed by: @abstraktor

What's the best way to test the contents of the code completion/substitution menu for a given cursor position using a NodesTestCase or an EditorTestCase?

Example for an EditorTestCase:

How do you test with two-step deletion enabled?

Example:

How do you test with typing over existing text enabled?

Example:

How do you test that you use a language?

Example:

How do you access the error cells in the inspector?

How do you click on anything in a test?

You can execute intentions and actions programmatically. You can use the press mouse(x,y) and release mouse statements for UI elements like buttons.

How can you test that code completion works?

You can use a scope test to check if all items are visible in the menu. To check the number of actions in the menu, call:

How do I run some checks of the model checker?

Example code:

How do I run unit tests through a run configuration?

Example code:

Can I test the actions menu?

For internal tests in MPS, there is the following code:

MenuLoadingUtils.java and WithExecutableAction ⧉ are not public, so they have to be created manually.

How can I run a Base Language test (BTestCase ⧉) in an MPS environment?

Add the MPSLaunch annotation to the test case and extend the class EnvironmentAwareTestCase ⧉.

Example:

Troubleshooting

Some testing issues are caused by the way tests are executed. Tests can run either in the same MPS instance or in a new instance; in the latter case, a completely new environment is started. Some editor tests in MPS Extensions ⧉ can only be executed in a new instance, and this is probably also true for other types of tests.

Additionally, tests are executed in temporary models, so node IDs change, and root nodes become normal nodes with parents. Avoid adapting your code to work in test models, and avoid adding checks for whether you are in a test model.

Tests aren't running at all

A test info node ⧉ has to be added to the model of the tests so that the tests can find the project's path, and the project path has to be set in this node. Make sure that the variables used in this path are defined in Preferences → Appearance & Behavior → Path Variables (TestInfo | MPS ⧉).

Tests have a long warm-up time and run slowly.

When running the tests from a run configuration, enable Execute in the same process in the configuration settings.

You can also check the box Allow parallel run (Running the tests | MPS ⧉).

The tests only work in MPS and not on the command line

Why does my test fail when run from Ant but not when run from MPS? ⧉ (Specific Languages' blog). Simpler answer: if the behavior on CI differs from your local one, you may have old or broken generated or compiled artifacts on your machine that make things work locally although CI breaks, or vice versa. Clean the working copy with git clean -xfd and rebuild the project locally.

Why does the test execution fail with "Test project '$…' is not opened. Aborted"?

This happens because the path variable used in the TestInfo node is not defined. Go to File → Settings → Path Variables and create an entry for your variable with the path to the project location on your hard drive.
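To see why an undefined variable breaks the lookup, here is a hypothetical Java sketch of path-variable expansion, assuming the `${name}` syntax: the project path can only be resolved when every referenced variable has a value. MPS's actual expansion code is not shown here and may differ; `PathVariables` and the variable name `my.project` in the usage note are made up.

```java
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical sketch of path-variable expansion; MPS's real implementation may differ.
class PathVariables {
    private static final Pattern VARIABLE = Pattern.compile("\\$\\{([^}]+)\\}");

    // Replaces every ${name} with its value and fails on undefined variables.
    static String expand(String path, Map<String, String> variables) {
        Matcher matcher = VARIABLE.matcher(path);
        StringBuffer result = new StringBuffer();
        while (matcher.find()) {
            String value = variables.get(matcher.group(1));
            if (value == null) {
                throw new IllegalStateException("Path variable not defined: " + matcher.group(1));
            }
            // quoteReplacement keeps '$' and '\' in the value literal.
            matcher.appendReplacement(result, Matcher.quoteReplacement(value));
        }
        matcher.appendTail(result);
        return result.toString();
    }
}
```

With `my.project` mapped to `/home/me/project`, the path `${my.project}/languages` expands to `/home/me/project/languages`; without that entry, the expansion fails, which corresponds to the missing Path Variables entry described above.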

java.lang.IllegalStateException: The showAndGet() method is for modal dialogs only.

One reason for this message is that a dialog is about to be displayed in a headless environment such as a build server. The only way to avoid the exception there is not to show the dialog. According to the IntelliJ documentation, it can also happen when the dialog is not shown on the EDT or is not modal.
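A minimal Java sketch of guarding a dialog, assuming your code can fall back to a default answer when no user is available. `GraphicsEnvironment.isHeadless()` is the standard JDK check; inside MPS, the IntelliJ Platform's `ApplicationManager.getApplication().isHeadlessEnvironment()` is another option. `DialogGuard` and `confirmOrDefault` are hypothetical names, and the dialog is passed in as a `BooleanSupplier` so that the logic can be tested without a display.

```java
import java.awt.GraphicsEnvironment;
import java.util.function.BooleanSupplier;

// Hypothetical guard around a modal dialog for code that may run headless (e.g. on CI).
class DialogGuard {
    // Standard JDK check: true when no display, keyboard, or mouse is available.
    static boolean currentlyHeadless() {
        return GraphicsEnvironment.isHeadless();
    }

    // Shows the dialog only when a UI is available; otherwise returns the default answer.
    static boolean confirmOrDefault(boolean headless, BooleanSupplier showDialog, boolean defaultAnswer) {
        if (headless) {
            return defaultAnswer; // never reach showAndGet() without a UI
        }
        return showDialog.getAsBoolean();
    }
}
```

A call site might look like `DialogGuard.confirmOrDefault(DialogGuard.currentlyHeadless(), () -> dialog.showAndGet(), false)`, where `dialog` is your `DialogWrapper`, created and shown on the EDT.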

Which temporary model should you use for a node test setup?

TempModuleOptions#nonReloadableModule is provisional code, so TempModuleOptions#forDefaultModule might be the better option. The difference from the first option is that the module is invisible to the user, which shouldn't matter since the module is discarded after the test anyway.